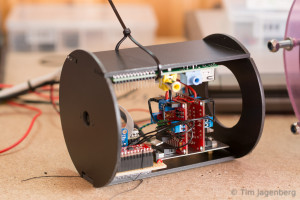

For the Maker Faire coming to Trondheim later this month, I’m building a simple submersible remotely operated vehicle (ROV). The concept uses a plumbing tube as the housing and submersible electric pumps as motors. A Raspberry Pi with a Camera Module will deliver the live video feed over an Ethernet cable. Remote control runs over a serial link to a Teensy 3.1, which handles the sensors and motor controllers. The communication will be implemented using MAVLink, which makes it possible to use QGroundControl as the ground station.
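Because QGroundControl speaks MAVLink, every packet on that serial link has to follow MAVLink's framing. Below is a minimal Python sketch of MAVLink 1.0 framing, assuming the v1 wire format (start byte 0xFE, a 5-byte header, then the payload and an X.25 checksum seeded with a per-message CRC_EXTRA byte) and using HEARTBEAT as the example message. The helper names are hypothetical, and the constants (CRC_EXTRA 50 for HEARTBEAT, MAV_TYPE 12 for a submarine, MAV_STATE 4 for "active") come from the common message set as I understand it, so check them against the generated headers. In practice the generated C headers or pymavlink would do this for you.

```python
import struct

MAVLINK_V1_STX = 0xFE  # start-of-frame marker for MAVLink 1.0

# Per-message CRC_EXTRA seeds (assumed from the common message set).
# Add one entry for every message ID you send or receive.
CRC_EXTRA = {
    0: 50,  # HEARTBEAT
}


def x25_crc(data: bytes, crc: int = 0xFFFF) -> int:
    """Accumulate the X.25 (CRC-16/MCRF4XX) checksum MAVLink uses."""
    for byte in data:
        tmp = byte ^ (crc & 0xFF)
        tmp = (tmp ^ (tmp << 4)) & 0xFF
        crc = ((crc >> 8) ^ (tmp << 8) ^ (tmp << 3) ^ (tmp >> 4)) & 0xFFFF
    return crc


def pack_frame(msg_id: int, payload: bytes, seq: int = 0,
               sysid: int = 1, compid: int = 1) -> bytes:
    """Wrap a payload in a MAVLink 1.0 frame (hypothetical helper)."""
    header = struct.pack("<BBBBB", len(payload), seq & 0xFF, sysid, compid, msg_id)
    # Checksum covers header (without STX) and payload, then the CRC_EXTRA byte.
    crc = x25_crc(header + payload)
    crc = x25_crc(bytes([CRC_EXTRA[msg_id]]), crc)
    return bytes([MAVLINK_V1_STX]) + header + payload + struct.pack("<H", crc)


def parse_frame(frame: bytes):
    """Validate one complete MAVLink 1.0 frame and return (msg_id, payload)."""
    if len(frame) < 8 or frame[0] != MAVLINK_V1_STX:
        raise ValueError("not a MAVLink 1.0 frame")
    length = frame[1]
    if len(frame) != 6 + length + 2:
        raise ValueError("length mismatch")
    msg_id = frame[5]
    payload = frame[6:6 + length]
    crc = x25_crc(frame[1:6 + length])
    crc = x25_crc(bytes([CRC_EXTRA[msg_id]]), crc)
    (received,) = struct.unpack("<H", frame[6 + length:])
    if crc != received:
        raise ValueError("checksum mismatch")
    return msg_id, payload


def heartbeat_payload(mav_type: int = 12, autopilot: int = 0, base_mode: int = 0,
                      custom_mode: int = 0, system_status: int = 4,
                      mavlink_version: int = 3) -> bytes:
    """HEARTBEAT payload; on the wire the uint32 field comes before the uint8 fields."""
    return struct.pack("<IBBBBB", custom_mode, mav_type, autopilot,
                       base_mode, system_status, mavlink_version)


frame = pack_frame(0, heartbeat_payload(), seq=7)
print(len(frame), frame.hex())
```

A ground station generally only lists a vehicle once it sees heartbeats, so the ROV side would typically send one about once per second, alongside the telemetry messages.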

After an initial test of whether the tube could withstand the pressure at up to 12 m water depth, the pieces are now falling into place. The serial communication via MAVLink works and just needs a little performance tweaking. It transmits the manual controls from a gamepad down to the ROV controller and the telemetry back up to the ground station. The telemetry consists of the air pressure and temperature inside the body (MPL115A2) and orientation data from the IMU/AHRS (MPU-9150). The ground station plots temperature and pressure as line graphs and uses the orientation data to drive an artificial horizon.
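As a rough sense of what the 12 m test means for the hull, here is a worked hydrostatic estimate, assuming fresh water (ρ ≈ 1000 kg/m³) and g ≈ 9.81 m/s²:

$$
\begin{aligned}
p_\text{gauge} &= \rho g h \approx 1000\,\mathrm{kg/m^3} \times 9.81\,\mathrm{m/s^2} \times 12\,\mathrm{m} \approx 117.7\,\mathrm{kPa} \approx 1.18\,\mathrm{bar} \\
p_\text{abs} &= p_\text{atm} + p_\text{gauge} \approx 101.3\,\mathrm{kPa} + 117.7\,\mathrm{kPa} \approx 219\,\mathrm{kPa}
\end{aligned}
$$

In sea water (ρ ≈ 1025 kg/m³) the gauge figure would be about 2.5 % higher. This is the load on the tube walls and windows; the MPL115A2 only sees the air pressure inside the sealed body.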

The plumbing-tube housing is sealed with two acrylic-glass windows: a single 6 mm pane in front of the camera, and a rear end made of three 4 mm layers. These three layers form tunnels for the cables running out to the motors and up to the ground control station. I hope that, with plenty of silicone, this will be watertight.

If anyone has a good idea for how to set up a basin or pool to demonstrate the ROV in, let me know.

Four weeks to go! 🙂

Hi Tim,

Interesting article; it’s nice to read about a project so similar to the one I am working on. Could you share the details of the artificial horizon? I would like to implement it, but I lack the knowledge. At the moment I have Python scripts that return the sensor data to the surface via SSH, and the live video is shown via JWplayer. Control is via a USB joystick connected to a laptop.

Thanks in advance,

Niels

Hi Niels,

I implemented the artificial horizon using an MPU-9150 9-DOF IMU from SparkFun (https://www.sparkfun.com/products/11486), but it’s a pain to get working properly. I would suggest looking into the new Adafruit BNO055 9-DOF IMU (http://www.adafruit.com/products/2472); it seems more user-friendly.

The orientation data is transmitted to the ground station over the MAVLink protocol as an ATTITUDE_QUATERNION message: https://pixhawk.ethz.ch/mavlink/#ATTITUDE_QUATERNION
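To draw a horizon yourself, the receiving side has to turn that quaternion into roll and pitch and then draw a rotated, shifted line. Here is a minimal Python sketch, assuming MAVLink's field order q1..q4 = (w, x, y, z), the aerospace yaw-pitch-roll convention, and screen coordinates with y pointing down. `horizon_endpoints` and its `px_per_rad` scale are hypothetical, and this is not necessarily how QGroundControl draws its horizon.

```python
import math


def quaternion_to_roll_pitch(q1, q2, q3, q4):
    """Roll and pitch (radians) from a unit quaternion with q1 = w, q2 = x, q3 = y, q4 = z."""
    w, x, y, z = q1, q2, q3, q4
    roll = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))
    sin_pitch = 2.0 * (w * y - z * x)
    sin_pitch = max(-1.0, min(1.0, sin_pitch))  # clamp rounding errors near +/-90 deg
    pitch = math.asin(sin_pitch)
    return roll, pitch


def horizon_endpoints(roll, pitch, width, height, px_per_rad=200.0):
    """Endpoints of the horizon line on a width x height display (hypothetical helper)."""
    cx, cy = width / 2.0, height / 2.0
    # Nose up (positive pitch) pushes the horizon towards the bottom of the
    # display, measured along the roll-rotated "down" direction.
    offset = pitch * px_per_rad
    mx = cx + offset * math.sin(roll)
    my = cy + offset * math.cos(roll)
    # Positive roll (right side down) makes the right end of the horizon rise;
    # the line is drawn longer than the screen so it never ends inside the view.
    dx = width * math.cos(roll)
    dy = -width * math.sin(roll)
    return (mx - dx, my - dy), (mx + dx, my + dy)


roll, pitch = quaternion_to_roll_pitch(1.0, 0.0, 0.0, 0.0)
print(horizon_endpoints(roll, pitch, 320, 240))
```

With a plotting or GUI library you would then fill everything below that line with the "ground" colour and draw the line itself on top.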

Greetings

Tim